Meta’s Massive Update: A Shift In Content Moderation

Meta is making major changes to how content is moderated on Facebook, Instagram, and Threads, and the changes affect everyone: individual users and business pages alike. Here’s what you need to know!

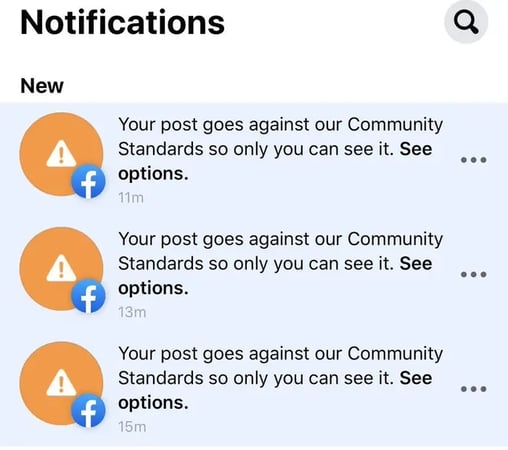

Meta has acknowledged that its moderation policies had become too restrictive, with too much content being censored or wrongly flagged. Innocent posts and pages were being taken down with no warning and no way to get them reinstated. In response, Meta has ended third-party fact-checking in the US and will move toward Community Notes, a system similar to the one used on X (formerly Twitter). Instead of external fact-checkers, users will add and rate context on posts. In other words, fact-checking is still happening, but it is done by Meta's own user community.

Notes will require agreement from people with diverse perspectives. Meta won’t write or control the notes; users will.

Example of how Community Notes works on X/Twitter

Meta also plans to loosen restrictions on hot-button topics and to reduce automated takedowns for minor violations. It will also require more review by actual humans, sometimes by multiple reviewers, before removing content. The company says it is adding staff to handle the expected increase in appeals and will use AI to provide a second opinion before content is removed.

Another big update: users will be able to control how much political content they see in their feeds. This is a reversal from 2021, when Facebook (supposedly) reduced political content across the board. Facebook, Instagram, and Threads will use engagement signals to adjust content recommendations. Basically, how you engage with the political content in your feed will tell Meta whether to show you more of it.

So, what does this mean for us? Here's our take:

There are definitely pros to this move. Less censorship will hopefully mean fewer wrongful takedowns and fewer 'Facebook jail' incidents for both users and business pages. A better, more transparent appeals process is also much needed. If it works correctly and is applied fairly, it could improve the user experience across all Meta platforms. It could also ease the frustration users feel when they face yet another denial or automated takedown message with no option for human review.

But, like everything, there are cons. False information could spread more easily without fact-checkers. Community Notes may reflect dominant perspectives rather than facts. Looser moderation overall could also mean more offensive content makes it into your feed. And the human element in appeals reviews, although useful, could slow the response to genuine violations.

And finally, as we all know, Meta has a history of rolling out features that don’t work as planned. We'll be watching closely to see how this new direction plays out!

What are your thoughts on this update? Head over to our online group and see what more than 1,000 other small business owners have to say!
